09/12/2023

Enterprise

Securing AI Is The Next Big Platform Opportunity

Building with AI presents a new class of security challenges, creating a new platform opportunity

Every new technology presents huge opportunities for innovation and positive change; generative AI may be the single greatest example of that. But each step-function change in technology – an emergence of a new building block like AI for instance – results in a new set of challenges around security. We’ve seen this before: The rise of SaaS led to CASB (Cloud Access Security Broker) and Cloud Security companies such as Netskope and Zscaler. The rise of public cloud led to CSPM (Cloud Security Posture Management) companies such as Wiz. The increasing prevalence of AI is creating a similar opportunity: We expect the rise of a new category of platforms to secure AI.

At a CIO event we hosted recently in New York, we had around 200 IT decision makers in the audience. When asked if they had blocked ChatGPT access, a number of hands went up. This shows how quickly the intersection of cyber security and AI has become top of mind for IT leaders as compared to just a year ago, when ML model security was still a technical space.

Increased risk surface area

AI presents new opportunities for adversaries. It expands the enterprise attack surface, introduces novel exploits, and increases complexity for security professionals.

As enterprises train their own AI models, they risk exposing proprietary data to the outside world or having the data altered in ways that make the model’s predictions inaccurate. Public-facing AI models could be manipulated to produce the wrong results – approving a fraudulent application for a loan, for example. Using a large language model (LLM) to write software could produce code with subtle but exploitable flaws. There is increasing recognition of these challenges – a greenfield opportunity for founders within the cyber community.

The AI Threat Landscape

To categorize threats to AI systems, it helps to think about the following high-level picture:

Here are some of the common threats we’re seeing play out in the generative AI space:

- Prompt injection: By crafting prompts in a careful way, an attacker could induce an LLM to reveal confidential information or engage in unintended behavior. Last February, for example, a researcher at Stanford used a prompt injection attack on Bing Chat to reveal, and then change, its operating instructions.

- Dataset poisoning: An adversary who gains access to machine learning training data could tamper with it to degrade the performance of the model or cause it to produce inaccurate results. In March, researchers at Cornell published a paper showing how to poison commonly used training datasets with minimal effort or expense.

- Data leakage: Training data might inadvertently contain proprietary or sensitive information, which can be accidently revealed to other users of the model. We’ve already seen examples of this in the wild, when early versions of GitHub Copilot accidentally revealed sensitive API keys, or when ChatGPT leaked internal source code uploaded to it by engineers at Samsung.

- Insecure code generation: An LLM could write code using examples of older code that had undiscovered vulnerabilities. This could result in poor performance or flaws exploited by adversaries. An April 2023 paper published by computer scientists at the University of Quebec noted that code produced by ChatGPT “fell well below minimal security standards.”

- Reputational risk: Models that generate inaccurate or offensive responses could damage the reputation of the companies that use them. The most notorious example of this occurred in 2016, when Microsoft released an AI chatbot on Twitter that began engaging in racist conversations.

- Copyright violations: Models trained on publicly available data or images run the risk of violating copyrights in the answers they produce. OpenAI and Meta have already been sued for allegedly violating the rights of artists whose material was used in training their models.

In addition to these attack vectors, there are now a number of freely available open-source tools that can be used to probe AI models for vulnerabilities and/or exploit them. For example:

- Counterfit: Automated security assessment for ML models

- Adversarial Robustness Toolbox: Library for adversarial testing of ML models

- Augly: Focused on building adversarial robustness.

- Prompt Injection Demonstrations: New ways of breaking app-integrated LLMs

While these tools are intended to aid security professionals in stress-testing AI models, they could also be weaponized and used to attack the models by ‘script kiddies’ with little data science expertise.

Regulations and Standards Bodies

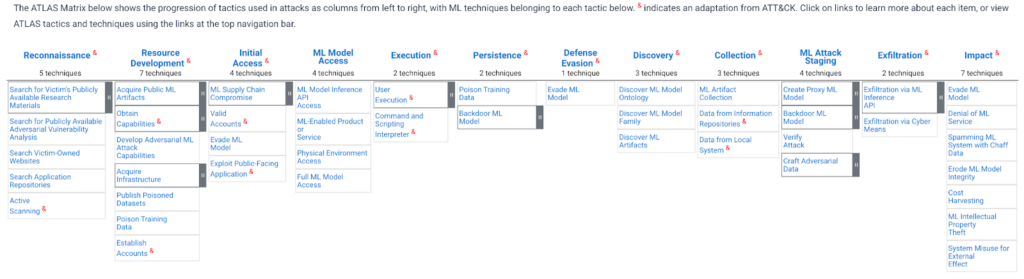

Organizations such as MITRE Atlas (Adversarial Threat Landscape for Artificial-Intelligence Systems) are already focused on identifying these threat vectors. From Mitre’s ATLAS framework:

We expect in time they’ll formulate frameworks for protection and mitigation as well – some of this is already available here.

We also expect governments worldwide will play an active role in shaping AI regulation, which will have an impact on the capabilities that AI security companies will have to build. The EU has been at the forefront of some of this work with its AI Act, the world’s first comprehensive AI law.

A new layer in the security stack

Generative AI represents another layer in the security stack, one that will require new tools to adequately protect. This new layer needs to address security challenges in every category we covered in the previous section:

- Data security: Securing the data used to train and operate AI systems. This includes using strong encryption and access controls.

- Model security: Securing the AI models themselves. This includes using techniques such as model obfuscation and watermarking.

- Prompt/inference security: Securing the interfaces and APIs with which users query the models. This includes API security and request validation.

- System security: Securing the physical systems that AI systems run on. This includes using physical security measures such as firewalls and intrusion detection systems.

Ask the right questions

Founders looking to take advantage of this market opportunity should consider some fundamental questions:

- Which roles or personas within an enterprise most care about AI security? Is it engineering, security, or compliance teams? Which use cases do they care the most about?

- Where do the existing tools fall short? For instance, if uploading data to ChatGPT is a concern, why would an existing DLP or firewalling solution not be enough to address this risk?

- What does an LLM Firewall/Security platform look like? Where would it live in the stack?

- How will AI security be consumed, and who are the decision makers driving this purchase?

Lightspeed has a long history of supporting seminal enterprise security companies. We believe AI represents a huge opportunity for innovative cybersecurity solutions. If you’re interested in talking through any of these issues, please reach out!

Authors