Domain-specific apps offer the greatest ROI for enterprise AI.

Technology adoption is inherently cyclical, and no technology has gone through more up-and-down cycles than artificial intelligence. After enduring multiple long AI winters, the technology is now enjoying a red-hot summer that shows no sign of cooling.

Nearly all of the energy and activity surrounding the technology can be traced to the rise of generative AI and, more specifically, to the debut of OpenAI’s ChatGPT in November 2022, just 18 months ago. Since then, gen AI has entered popular culture in a big way, introducing a number of new terms to our vocabularies, including prompt engineers, deepfakes, and AI girlfriends. By now, even your grandparents have heard of ChatGPT.

Though this “overnight sensation” was actually years in the making, its sudden and impactful emergence put some of the world’s biggest technology companies on the back foot.

Since then, companies like Amazon, Google, and Meta have been racing to catch up, with varying levels of success (and a few notable missteps).

Gen AI’s emergence also opened the floodgates to an entire ecosystem of startups, ranging from developers of large language models (LLMs) to enablement infrastructure platforms and an ever-expanding number of consumer, enterprise, and sector-specific applications.

In short, the AI industry is evolving rapidly. Some of the companies grabbing headlines today may not be around in a few years. Equally, it’s plausible that the startups that will play a formative role in shaping this nascent industry may not yet even exist. Our purpose here is to offer a snapshot of the AI market as it stands today, the factors that we anticipate will shape its future, and where we believe the market is headed over the long term.

Part I: A crowded, noisy market

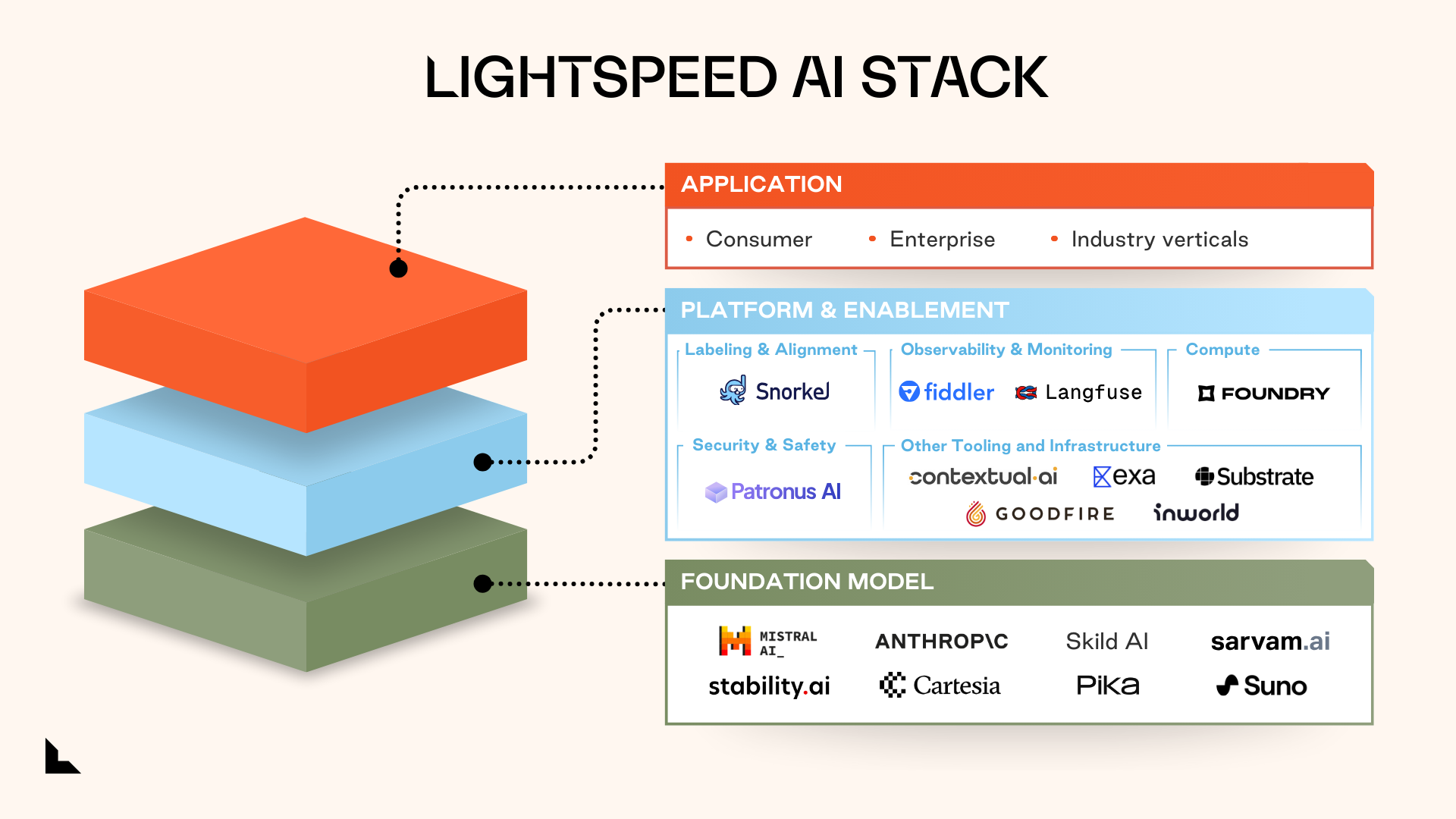

Today, more than 200 startups are vying for attention in the AI market, spread across two dozen categories. Broadly, though, each falls into one of three layers: foundation models, enablement platforms, and applications.

Foundation model layer. These are the fundamental building blocks of gen AI applications. Foundation models enable conversational chatbots to parse written or spoken prompts and produce output like text, images, audio, video, or even code.

Over the past 18 months, the number of foundation models has exploded, ranging from proprietary LLMs like OpenAI’s GPT-4, Google Gemini, and Anthropic’s Claude to open-source models such as Mistral*, Falcon, and Llama. There are also models trained to generate specific types of output, such as images (Midjourney, Stability.ai*), music and audio (Suno*, Udio), video (Pika Labs*, Sora), and code (Codex, GitHub Copilot).

Notably, over the last year we’ve seen a rise in small language models (SLMs). These models perform almost as well as their larger counterparts in narrow domains but with lower costs and smaller carbon footprints. SLMs are also trained on smaller, highly curated datasets — making them less prone to problems such as hallucinations and copyright issues and more apt for targeted use cases.

Platform enablement layer. This layer consists of a broad range of middleware platforms that perform essential, albeit less glamorous, tasks. From automating the labeling of massive training datasets to enhancing machine learning security and explainability, these platforms play an often quiet but critical role in the success of the AI ecosystem. They also help address the industry-wide shortage of GPUs and enable enterprises to develop their own LLMs in a compliant, privacy-first way.

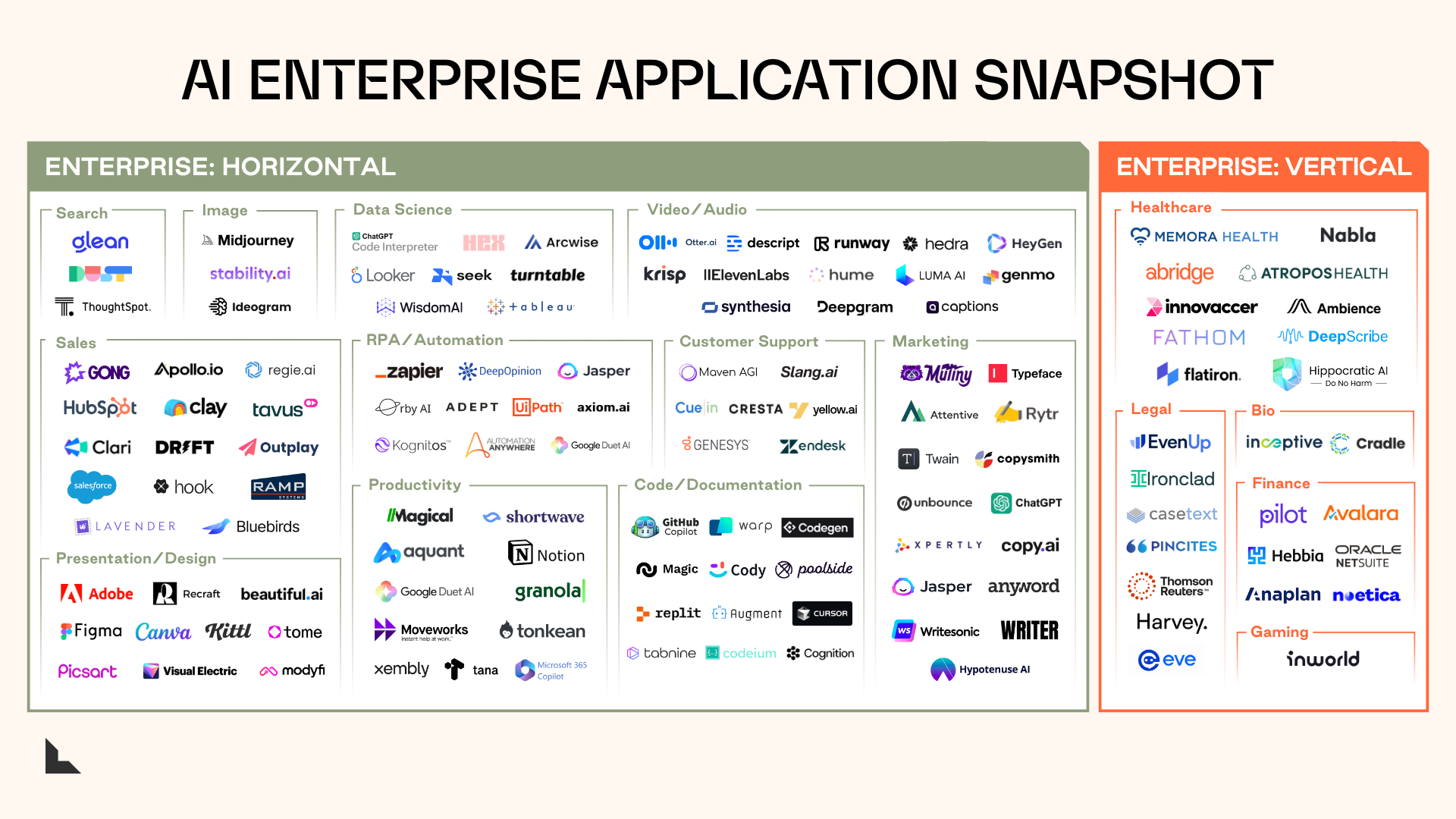

Application layer. The overwhelming majority of AI startups operate at this layer, focusing on the “last mile” between the foundation models and the end user. These applications can be further subdivided into consumer, business, and industry-specific categories.

While consumer-facing apps tend to grab the spotlight, we believe enterprise apps will form the backbone of a scalable and enduring AI market. We expect narrower, vertical tools to be among the first to gain meaningful traction, but end-to-end workflow automation will eventually unlock trillions in value across virtually every sector by fundamentally disrupting entire software categories.

Part II: Our views on enterprise AI

While we recognize the clear potential of AI, we also acknowledge the growing hype. The game is still in the early innings, and getting these models to deliver measurable value to enterprise customers is a work in progress. Until concerns around accuracy, reliability, and consistency are resolved, barriers to broad, enterprise-wide acceptance of AI platforms will remain.

The fluidity of today’s enterprise AI market — where the state of play changes daily, and sometimes even by the hour — means accepting a level of ambiguity in ‘what to expect’ from the future. Still, there are a few solid conclusions we can draw from what we’ve seen so far.

1. Knowledge retrieval will kickstart productivity gains, but thoughtful user interface (UI) design will send them into overdrive.

While there has been speculation about AI’s potential to eliminate thousands of knowledge worker jobs, the greatest benefit generative AI will bring to enterprises is augmenting their current workforce, not automating it out of existence. Recent studies predict that roughly eight out of ten American workers will have at least 10 percent of their tasks taken over by AI, while the rest are likely to see at least half of their current tasks automated.

Overwhelmingly, the fundamental enterprise use case today remains providing easy access to information. Between 80 and 90 percent of all enterprise data is unstructured or semi-structured — emails, chats, video, web pages, and so on. LLMs excel at analyzing this data and making it easily accessible.

Conversational work assistants that offer secure access to enterprise data will make engineers, salespeople, marketing teams, legal departments, and back-office personnel more productive. Over the next five years, we believe using AI could save professionals several hours per week.

The technology could contribute more than $15 trillion to the global economy by 2030, and we think a significant portion of that could come from productivity gains.

As a result, human-computer collaboration and intuitive UI will be the model enterprises pursue for the foreseeable future, and point solutions that offer quick wins in specific areas will be the first to be adopted.

2. Tech-averse industries will begin to adopt AI.

Vertical apps such as Abridge* and Ambience (health), Harvey and EvenUp* (legal), Inceptive and Cradle (biotech), and Avalara and Anaplan (finance) illustrate the power that AI can bring to industry-specific problems.

As we gain a deeper understanding of the strengths and limitations of LLMs, and of the kinds of use cases they map best to, we’ll start to see even more domain-specific uses emerge and dominate the enterprise landscape. In particular, we expect to see generative AI penetrate traditionally software-resistant industries like manufacturing, construction, and pharma, which we believe represent some of the most intriguing investment opportunities.

3. Vertical apps offer the most immediate impact, but the real long-term benefits lie in system integration.

This technology is most powerful when operating within narrowly defined spaces delineated by clear guardrails. Highly tuned models with curated datasets offer measurable ROI while avoiding most of the pitfalls of larger LLMs.

But right now, most enterprises are deploying AI in standalone applications. As a result, people tend to obsess too much over the performance of individual apps. The more interesting capabilities will emerge when these apps are integrated and work as a cohesive system. We believe the biggest transformation will happen when enterprises shift to Compound AI, combining disparate models into a broader AI architecture.

4. Startups lead now, but incumbents are rising.

The current AI startup landscape is characterized by significant noise, largely because many new ventures offer little in terms of unique innovation. The barriers to entry in the current gen AI space remain rather low, and it’s starting to show. Many early applications don’t contain a lot of original IP; without access to proprietary data or deep domain experience, these ventures are like buildings constructed on a foundation of quicksand.

This situation is further complicated by established software giants rebranding themselves as AI companies; however, many of these companies are merely bolting AI features onto their existing products without substantive integration or vision.

The good news: a lot of really thoughtful, product-minded people have yet to take their first real swing at these questions.

As in the cloud computing boom, we believe the most promising startups, both today and tomorrow, will pair founders who have deep domain expertise with engineers native to building gen AI solutions. In particular, we expect strong product thinking and user-centricity will separate the true innovators from the trend followers.

5. The disruption has only just begun, and the best ideas have yet to emerge.

Most organizations are still in the experimental phase — feeling their way forward but not charging full speed ahead.

Many are still grappling with fundamental questions, such as: Do we have the right kinds of data to make this technology useful? Are there sufficient guardrails in place to head off potential issues around copyright infringement, data leaks, lack of transparency, or potential bias? How can we redefine work to allow our people and this new technology to collaborate without conflict? What will the implications for these applications be when the underlying models reach AGI?

These are exciting times in the gen AI market, but also still early days.

JOIN US

At Lightspeed, we’re always looking for founders who are user- and customer-centric, operate from first principles, and want to build enduring companies that will be here long after the flashier applications have faded from memory.

If you’re interested in connecting with our team, reach out to us at lisa@lsvp.com and nnamdi@lsvp.com.

* Lightspeed portfolio companies

The content here should not be viewed as investment advice, nor does it constitute an offer to sell, or a solicitation of an offer to buy, any securities. Certain statements herein are the opinions and beliefs of Lightspeed; other market participants could take different views.

Authors