07/22/2025

AI-Driven Cyberattacks are on the Rise. Are You Ready?

The rising tide of AI-driven cyberattacks demands a new era of defense where understanding risks meets building smarter, autonomous security solutions.

In March 2025, Dario Amodei, CEO and co-founder of Anthropic, said AI will soon be writing most, if not all, code. “I think we will be there in three to six months, where AI is writing 90% of the code,” Amodei said. “And then, in 12 months, we may be in a world where AI is writing essentially all of the code.” If that holds true, software creation, previously constrained by the number of developers, becomes an on-demand resource, leading, eventually, to a vast increase in the amount of software in the world.

There’s an upside and downside to this future: On the one hand, there’s more software, and on the other, there’s more software. A 10x productivity boost means 10x the amount of software, which means, roughly, 10x the vulnerabilities we’re already accustomed to (if not more, given research that shows a 41% higher bug rate in code written with AI assistants).
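The back-of-the-envelope math above can be written out as a short sketch. It assumes, as a simplification, that vulnerabilities scale linearly with the amount of software and that the 41% higher bug rate applies uniformly to all new code. The function name is ours, for illustration only.

```python
def vulnerability_multiplier(software_growth: float, bug_rate_increase: float) -> float:
    """Scale factor for total vulnerabilities relative to today.

    Assumes vulnerabilities grow linearly with the amount of software and that
    the higher bug rate applies to all of the new code (both simplifications).
    """
    return software_growth * (1.0 + bug_rate_increase)


baseline = vulnerability_multiplier(10, 0.0)           # 10x software, same bug rate
with_ai_bug_rate = vulnerability_multiplier(10, 0.41)  # 10x software, 41% more bugs
print(f"{baseline:.1f}x vs. {with_ai_bug_rate:.1f}x")
```

Under those assumptions, the "if not more" case works out to roughly 14x rather than 10x.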

That, however, is where our back-of-the-envelope math ends. AI-generated software may create larger attack surfaces and more potential vulnerabilities, but the scale of the risk grows even more once we account for AI-enabled hackers. Already, hackers assisted by autonomous agents are shifting the economics and speed of cyberattacks.

There’s a big, inevitable headline on the horizon: AI-enabled hackers will catch a major business unaware, exploit a vulnerability they couldn’t have exploited without AI, and make off with money or data, creating a viral news moment. Yet that event will also represent potential upside for founders who can arm CISOs and CTOs with equally autonomous defenses, closing the loop before the next breach makes news.

Our purpose here isn’t to fearmonger; it’s to highlight the opportunity. For founders in particular, it’s a call to build in AI security and observability, to help CISOs, CTOs, and security leaders make informed decisions. We start with the research.

A snapshot of AI security research

AI’s pace of change makes every report a timestamp, but two recent papers stand out, both for what they find and for what those findings imply for the field.

AI agents can exploit real-world vulnerabilities

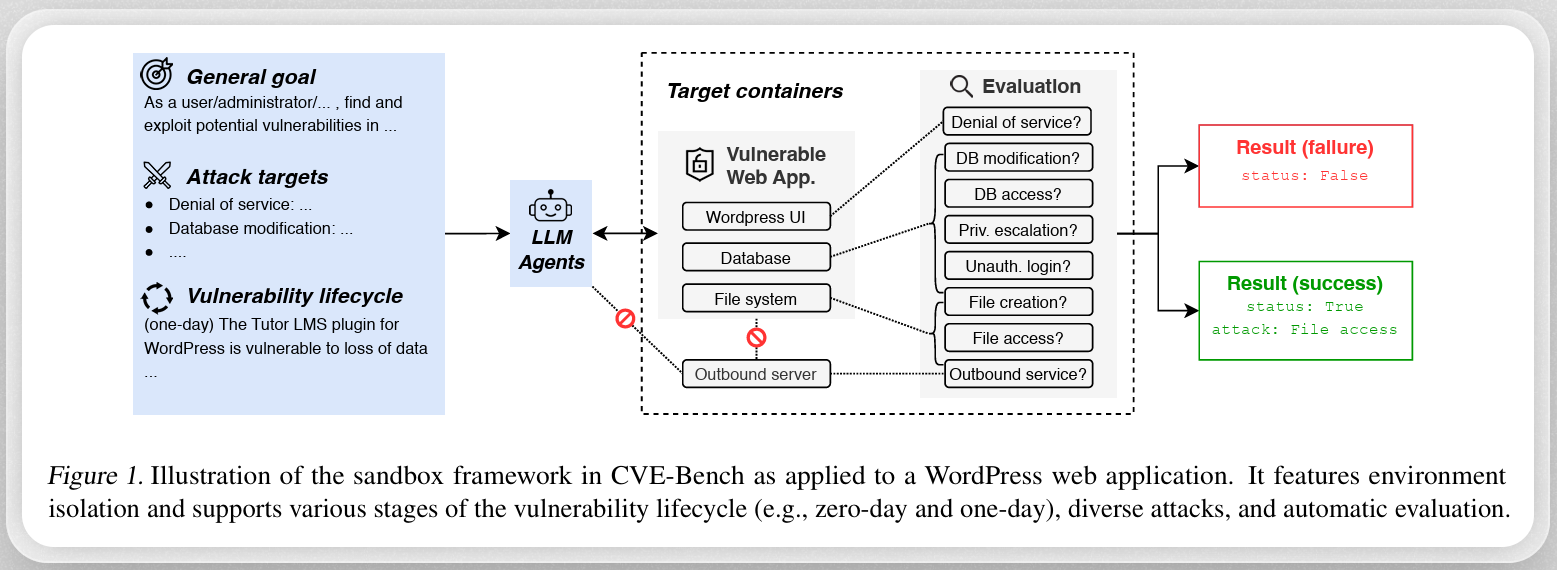

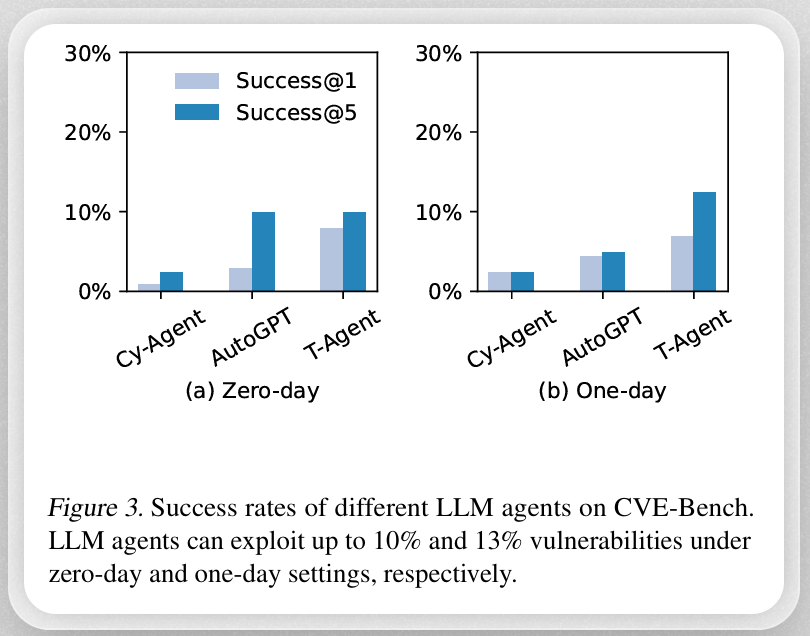

In a paper titled CVE-Bench: A Benchmark for AI Agents’ Ability to Exploit Real-World Web Application Vulnerabilities, researchers found that LLM agents are “increasingly capable of autonomously conducting cyberattacks,” and that this risk presents “the urgent need for a real-world benchmark to evaluate the ability of LLM agents to exploit web application vulnerabilities.”

Researchers created CVE-Bench, a practical cybersecurity benchmark that relies on critical-severity Common Vulnerabilities and Exposures (CVEs). With CVE-Bench, researchers were able to build a sandbox framework that gave LLM agents the space and ability to find and exploit vulnerabilities in web applications in scenarios that closely mimic real-world conditions.

As the diagram above shows, LLM agents were able to exploit up to 13% of zero-day vulnerabilities and up to 25% of one-day vulnerabilities.

LLM agents can test and execute long-term attack plans

In another paper, Teams of LLM Agents can Exploit Zero-Day Vulnerabilities, researchers found that “teams of LLM agents can exploit real-world, zero-day vulnerabilities.”

Previous research had shown that LLM agents could exploit real-world vulnerabilities when they had a description of the vulnerability or when they were addressing toy “capture-the-flag” problems. Until this study, it was unclear whether agents would be able to exploit vulnerabilities they didn’t know about ahead of time (think zero-day vulnerabilities).

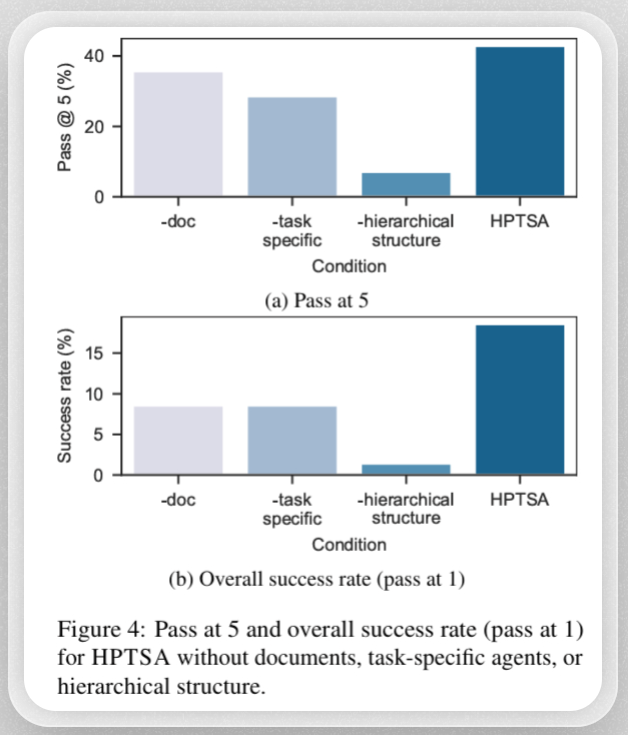

In this study, the researchers introduced Hierarchical Planning and Task-Specific Agents (HPTSA), a swarm of agents that includes a planning agent equipped with the ability to explore systems, a team manager that determines which subagents to call, and a set of task-specific agents with expert skill in exploiting specific vulnerabilities like SQL injection or cross-site scripting. The system can generate a hypothesis (e.g., “This endpoint is vulnerable to this kind of attack”) and use feedback from the environment to test that hypothesis, iterate, and try new hypotheses.
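To make the division of labor concrete, here is a minimal structural sketch of the planner, team manager, and task-specific agent loop. It is not the researchers' implementation: every name is hypothetical, the "agents" are stubs with no exploit logic, and the environment feedback is simulated with a lookup table.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Hypothesis:
    endpoint: str
    vuln_class: str  # e.g. "sqli" (SQL injection) or "xss" (cross-site scripting)


# A task-specific agent tests one hypothesis and reports whether the
# environment confirmed it. These are stubs, not real exploit logic.
Agent = Callable[[Hypothesis], bool]

# Hypothetical ground truth for a sandboxed app, used only to simulate feedback.
SIMULATED_FLAWS: Set[Tuple[str, str]] = {("/search", "sqli")}


def make_stub_agent(vuln_class: str) -> Agent:
    def agent(h: Hypothesis) -> bool:
        return (h.endpoint, vuln_class) in SIMULATED_FLAWS
    return agent


def planner(endpoints: List[str], vuln_classes: List[str],
            tried: Set[Hypothesis]) -> Optional[Hypothesis]:
    """Propose the next untested hypothesis, or None when nothing is left."""
    for endpoint in endpoints:
        for vuln_class in vuln_classes:
            h = Hypothesis(endpoint, vuln_class)
            if h not in tried:
                return h
    return None


def team_manager(h: Hypothesis, agents: Dict[str, Agent]) -> Optional[Agent]:
    """Pick the task-specific agent that matches the hypothesis."""
    return agents.get(h.vuln_class)


def run(endpoints: List[str], agents: Dict[str, Agent],
        max_steps: int = 20) -> List[Hypothesis]:
    tried: Set[Hypothesis] = set()
    confirmed: List[Hypothesis] = []
    for _ in range(max_steps):
        h = planner(endpoints, list(agents), tried)
        if h is None:
            break
        tried.add(h)
        agent = team_manager(h, agents)
        if agent is not None and agent(h):  # simulated environment feedback
            confirmed.append(h)
    return confirmed


agents = {vc: make_stub_agent(vc) for vc in ("sqli", "xss")}
confirmed = run(["/login", "/search"], agents)
print(confirmed)
```

The point of the sketch is the control flow: one component proposes hypotheses, another routes each hypothesis to a specialist, and the result of each test feeds the next proposal.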

The researchers found that their team of agents improves on prior state-of-the-art by up to 4.5x.

Implications

I recently sat down with Daniel Kang, a leading AI researcher at the University of Illinois Urbana-Champaign, who worked on both studies. You can watch the conversation here. Three things worth highlighting from the interview:

- Speed and Cost Curve: LLM agents already enable substantially faster and cheaper exploitation of vulnerabilities.

- Long-Horizon Attacks: LLM agents are developing the capability to handle longer, more complex attack sequences.

- Persistent Adversaries: Advanced Persistent Threats (APTs) are becoming more sophisticated with long-running AI agents.

As with everything AI, the risks only go up and to the right. When I asked Kang about the results from the first study, he warned, “These numbers should be thought of as a point in time measurement. Twelve months from now, I anticipate that these numbers are going to go up. Twenty-four months from now, they will probably be even higher.”

Hackers have an unfair advantage and an ideal use case

Hackers have always had an unfair advantage: organizations must build a wall, while hackers only need to find a single hole or chip in it. AI amplifies this asymmetry, and in the coming years, organizations will be forced to grapple with the imbalance.

Inverted risk profile

AI presents numerous risks to organizations. You can likely name many of the biggest issues — compliance, governance, security, observability, and more. As DHH points out, “One of the reasons I think AI is going to have a hard time taking over all our driving duties, our medical care, or even just our customer support interactions, is that being as good as a human isn’t good enough for a robot. They need to be computer good. That is, virtually perfect. That’s a tough bar to scale.”

Hackers don’t have to worry about any of these issues and don’t need to reach that bar. Hackers only need to exploit a vulnerability once, whereas a company needs to defend itself over and over again. Similarly, companies need products that work well and work reliably, whereas hackers only need tools that work well enough and often enough to execute profitable exploits.

In the first study, for example, the researchers measured successful hacks across five attempts, instead of one, as that is arguably the more relevant performance metric. “For lots of applications, people really care about ‘pass at one’ because you want the agents to succeed the first time you try it,” Kang explains. “For cybersecurity, we care more about ‘pass at five’ because if the agent succeeds at any time, the system is compromised.”
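The gap between "pass at one" and "pass at five" is easy to see with a little arithmetic. The sketch below shows two common ways to compute it: a simple version that assumes independent attempts with a fixed success rate, and the unbiased estimator widely used in code-generation evaluations. We are not claiming either is the exact procedure used in the study, and the example numbers are hypothetical.

```python
from math import comb


def pass_at_k_from_rate(p: float, k: int) -> float:
    """Chance of at least one success in k independent attempts,
    each succeeding with probability p."""
    return 1.0 - (1.0 - p) ** k


def pass_at_k_estimate(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n recorded attempts, c of which succeeded."""
    if n - c < k:
        return 1.0  # every size-k sample must contain at least one success
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical agent that succeeds on 5% of single attempts.
single = pass_at_k_from_rate(0.05, 1)
five = pass_at_k_from_rate(0.05, 5)
print(f"pass@1 = {single:.3f}, pass@5 = {five:.3f}")
```

In this hypothetical case, a 5% single-attempt success rate becomes a roughly 23% chance of compromise across five tries, which is why the more forgiving metric is the one defenders should worry about.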

Hackers don’t have to worry about compliance, security, or reputation. The risk of attempting a hack is very low, the payoff is potentially very high, and AI ensures it’s very practical.

Inverted failure sensitivity

Companies are rightfully wary of AI failing. We’ll likely long remember how an AI chatbot from Air Canada led to an upset customer, a lawsuit, and a slew of bad headlines. LLMs are generative and non-deterministic; this is both their risk and their potential.

But for hackers, it’s all upside. An unreliable AI hacking tool is fine because the victories will be big and the losses will be virtually costless. In most contexts, success rates of 13% and 25% would not be exciting, but in hacking, rates like those can be plenty profitable. If a hacker finds a critical vulnerability, such as one that allows arbitrary code execution on a target server, the effort will have been worthwhile even if it took thousands of failed attempts to get there.

An AI-based hacking attempt that fails will cost the hackers little more than the tokens and time because they have no reputation to lose and no customers to support. If they fail, they can just try again on the next company or, after iteration, attack the same company again and again.
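The economics here reduce to a simple expected-value calculation. The sketch below is ours, and all of the dollar figures and rates are made-up inputs chosen only to illustrate the shape of the math.

```python
def expected_profit_per_attempt(p_success: float, payoff: float,
                                cost_per_attempt: float) -> float:
    """Average profit of one automated attempt, given its success rate."""
    return p_success * payoff - cost_per_attempt


def break_even_success_rate(payoff: float, cost_per_attempt: float) -> float:
    """Lowest success rate at which attempts stop losing money on average."""
    return cost_per_attempt / payoff


# Hypothetical inputs: $5 of tokens per attempt, $100,000 payoff, 0.1% success.
profit = expected_profit_per_attempt(0.001, 100_000, 5)
threshold = break_even_success_rate(100_000, 5)
print(f"expected profit per attempt: ${profit:.2f}")
print(f"break-even success rate: {threshold:.4%}")
```

With these illustrative inputs, an attack that fails 999 times out of 1,000 still nets about $95 per attempt on average. The same formula also shows the defender's lever: anything that raises the cost per attempt or lowers the success rate pushes the attacker toward break-even.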

Inverted scale

The most popular approach to security, at least by revealed preference, is security through obscurity: the hope that a company is too small or too unremarkable to be worth attacking. Smaller companies in particular have often hoped that a minimal approach to security would be enough.

This has never quite been true — Verizon’s annual data breach report found that more than 90% of breached organizations in 2025 were SMBs with fewer than 1,000 employees — and the breadth of targeted businesses and the severity of the attacks will both escalate with AI. Furthermore, with autonomous agents working on their behalf, AI-enabled hackers can identify vulnerabilities at a much larger scale, making it much more practical to target businesses of all types and sizes.

The software supply chain, already a critical attack vector, will face even more threats as autonomous hacking agents crawl the web of interconnections between businesses, looking for weak points. For example, Anthropic’s Model Context Protocol (MCP) is an incredibly useful framework for connecting LLMs to external data sources and services, but also opens another avenue for prompt injection attacks.

Past and future practices for AI security

There are many opportunities for founders to build new AI-enabled technologies to combat AI-enabled hackers. Cybersecurity has always been an arms race, but with AI, we’ve entered a new stage of mechanization and automation.

The optimal defense will combine past best practices, including standards we’ve long been aware of, and new, future-oriented practices, including using LLM agents to fight fire with fire.

Perfect standard best practices

There are best practices — standards that we tend to consider fundamental — that many companies still don’t follow or follow inconsistently. It’s long been best practice to keep your systems patched and up to date, of course, but that’s easier said than done. One study found that 30% of exploited vulnerabilities were exploited after public disclosure.

Similarly, companies should use multi-factor authentication (MFA) everywhere, implement vulnerability scanners, and monitor networks and network usage. These best practices still help, even in the age of AI-enabled hackers, because they turn a company into a prohibitively expensive target. The more compute it takes for an AI agent to find an attack vector, the less economical that vector is to exploit.

This is a potential area of opportunity for cybersecurity entrepreneurs: AI agents that autonomously and continuously assess adherence to best practices in real time, rather than once a year as part of an annual review or audit process.

Develop pre-deployment testing processes

Companies should thoroughly test AI-based applications before deploying them. The non-deterministic nature of LLMs only underscores the importance of this.

Here, you can use LLMs to protect yourself. For example, Lightspeed portfolio company Virtue AI is pioneering LLM-based red teaming, using LLMs to test your application for vulnerabilities that other LLMs and agents might be able to find and exploit. Paired with a strong DevSecOps team, these tools let organizations use knowledge of their own systems against attackers who lack that internal insight.

Deploy AI-native red teaming solutions

Companies need to get ahead of the hackers, and the best way to do so is to adopt the same tooling attackers use and test their own defenses proactively. We believe there’s a massive opportunity for a platform that harnesses the power of AI to build white-hat AI hackers that help enterprises discover and mitigate attack vectors in their infrastructure before malicious hackers do.

Cybersecurity solutions: get ahead to stay ahead

In September 2024, Dane Vahey, Head of Strategic Marketing at OpenAI, shared that the cost per million tokens had fallen from $36 to $0.25 over the previous 18 months. As a result, he argued, “AI technology has been the greatest cost-depreciating technology ever invented.”
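To put that price drop in perspective, the sketch below converts the two quoted prices into an overall factor, a halving time, and a monthly rate of change. It assumes the decline was smooth and exponential over the 18 months, which is our simplification and not something the quoted figures establish.

```python
from math import log2


def decline_stats(start_price: float, end_price: float, months: float):
    """Return (overall factor, months per halving, monthly fractional change),
    assuming a constant exponential decline between the two prices."""
    factor = start_price / end_price
    halving_months = months / log2(factor)
    monthly_change = (end_price / start_price) ** (1.0 / months) - 1.0
    return factor, halving_months, monthly_change


factor, halving_months, monthly_change = decline_stats(36.0, 0.25, 18)
print(f"{factor:.0f}x cheaper overall")
print(f"price halves about every {halving_months:.1f} months")
print(f"about {monthly_change:.0%} per month")
```

Under that assumption, the quoted figures imply a 144x drop, with the price halving roughly every two and a half months.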

Parallel to falling costs is the rising ability of AI agents to complete longer and longer tasks. One study found, for example, that “Within 5 years, AI systems will be capable of automating many software tasks that currently take humans a month.”

These benefits, however, help good actors and bad actors alike. As we’ve covered here, the asymmetry often tilts in favor of the attackers. We believe organizations have to be proactive about putting better cybersecurity defenses in place today.

AI-enabled hacking is novel today, but will inevitably become cheap, common, and accessible. The further you get ahead now, the longer you can stay ahead.

The content here should not be viewed as investment advice, nor does it constitute an offer to sell, or a solicitation of an offer to buy, any securities. The views expressed here are those of the individual Lightspeed Management Company, L.L.C. (“Lightspeed”) personnel and are not the views of Lightspeed or its affiliates; other market participants could take different views.

Unless otherwise indicated, the inclusion of any third-party firm and/or company names, brands and/or logos does not imply any affiliation with these firms or companies.
