06/09/2023

Enterprise

Building With Contextual—Enterprise-Ready LLMs For All

Announcing Lightspeed’s latest investment in the AI space

Large language models (LLMs) have taken the world by storm in recent months, and for good reason — LLMs are uniquely suited to comprehend and extract insights from unstructured data, outperforming previous machine learning approaches that either didn’t work at all or were extremely brittle.

70% of enterprise data is unstructured in nature (text, images, video, etc). Further, the growth of remote communication and hybrid work has significantly accelerated the generation of unstructured data via applications like Slack, Zoom, and Google Docs. However, unstructured data is difficult to process and analyze by traditional information processing and business intelligence systems.

At Lightspeed, we think LLMs represent a significant “unlock” for AI in the enterprise, enabling businesses to generate many more insights from their troves of unstructured data. Existing operational and analytical processing systems were only tackling the tip of the large iceberg of enterprise data hidden under the surface. Now, due to LLMs, the vast majority of enterprise data has suddenly become addressable.

However, with great power comes great responsibility… and hallucination.

Most LLMs are trained on a general corpus of internet data, leading them to generate inaccurate responses to specific fact-based questions. In fact, most foundation models are not specifically trained to retrieve factual information, leading to poor performance in accuracy-sensitive contexts.

The flexibility and creativity of LLMs is therefore a double edged sword: if not reigned in, they can easily get too creative, quite literally making up plausible sounding “facts” in response to user queries. This is a non-starter for most serious business use cases and will inevitably slow adoption of generative AI if not addressed.

That’s not all. In the flurry of activity and interest around generative AI, a number of other thorny issues have emerged:

- How does source attribution work in a generative context?

- Large language models are, well, large — can the typical enterprise run such a model themselves?

- Facts change. How do we ensure models keep up with an ever-evolving world?

- AI is incredibly data-hungry — how can it be harnessed in a privacy-preserving way?

- Are these things compliant? How would you know?

While most are just waking up to this harsh reality, a few forward-thinking individuals saw this coming and have been working on practical, research-backed solutions to these problems. Contextual AI’s founders, Douwe Kiela and Amanpreet Singh, have been training sophisticated large language models for much of their professional careers, advancing the state of the art through their well-cited research at places like Meta (Facebook AI Research), Hugging Face, and Stanford University.

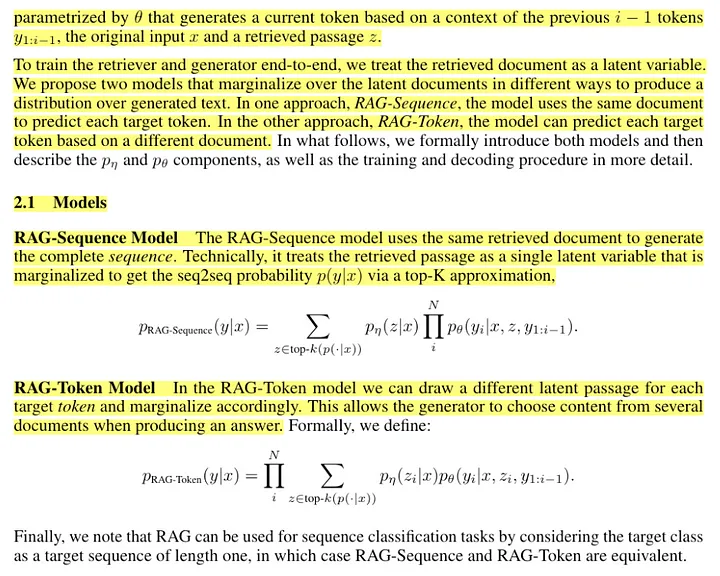

In 2020, Douwe’s team at Facebook AI Research published a paper titled “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” in which they proposed a simple but powerful idea — LLMs become substantially more powerful when connected to external data sources, so why not make information retrieval a core part of the training regimen? This leads to models which ground themselves in underlying data sources and generate factual responses much more reliably.

I read a lot of AI research, and I distinctly remember coming across the paper in 2020 and thinking — “this is really interesting, someone should do something with this!” I took copious highlights and made a mental note to return to the idea in the future.

Needless to say, the pitch for Contextual AI immediately clicked when I met Douwe and Aman years later. While their research record alone is impressive, they’re also laser-focused on real-world applications and customer pain points, traits not always seen among researchers.

We are still in the early days of the AI revolution, and nascent, off-the-shelf LLMs aren’t yet ready for prime time. For AI to achieve its promise, we need a platform for enterprise-ready LLMs.

Contextual AI is that platform. With Contextual AI, businesses will be able to build, train, and deploy their own customized LLMs, all in a compliant, privacy-preserving way and with fundamentally better performance.

At Lightspeed, we’ve backed next-generation enterprise technology businesses since our earliest days, and foundational architectural innovation has always been a core part of the thesis behind our most successful investments. Contextual AI is no different.

We at Lightspeed are excited to partner with Douwe and Aman as investors in their $20M seed round. We’re massive believers in their mission to make enterprise-ready AI a reality, and we can’t wait to see what they do.